When I discuss empirical studies of law with legal scholars and law students in Europe, whether in Germany, Belgium, France or Italy, one comment I often hear is “but this is not law”. This remark is worth exploring, because it tells us something important about the way academic lawyers conceive of their discipline and its object of study. I tell my bachelor students that there are basically two ways to approach the study of law. One treats law as a product of society and as an instrument of social control. Much of the political science literature on law and courts, as well as the law & economics literature, follows this approach. These literatures are concerned with the factors determining the emergence of legal rules and the impact these rules have on behaviour. The goal of the research is to uncover causal relations or make predictions, sometimes with a view to making policy recommendations. Continental legal scholars (common law folks tend to be more pragmatic and more eclectic) often feel inclined to say that this is not what their discipline is about, and are thus led to reject the findings of this strand of research as irrelevant to their work.

Yet I also tell my students that you can approach and study the law as a discourse. Judges, legal counsel and law professors produce texts, not only to explain but also to persuade. Legal discourse in this sense can be studied as a specialised form of rhetoric. In fact, a long scholarly tradition, going back all the way to Aristotle’s treatise on rhetoric, has embraced this research programme. Argumentation, discourse and texts are things that speak to most lawyers, so in my experience they are much less likely to dismiss this approach to legal research as irrelevant to what they do. Legal scholars conceive of their discipline as primarily dealing with words, and as concerned with relations among texts and the classification of legal materials. This is why lawyers of all stripes should be interested in text-mining.
Text-mining is a suite of techniques to explore, classify and scale textual data. In this post, I show how text-mining methods can be used to classify and construct summaries of large collections of legal documents as well as to extract jurisprudential positions from court opinions. In other words, to do what legal scholars already do, but with the help of the computer and on a much bigger scale.

Some Basics of Text-Mining

Text-mining methods do not parse texts quite the way the human brain does. Rather, they operate through complexity reduction. Let’s assume, for the sake of exposition, that we have a corpus with two texts, Text 1 and Text 2. In most text-mining applications, these texts will be reduced to sequences of word counts, with one count for every word that appears anywhere in the corpus. The sequence representing a document will therefore also contain some zeros: for Text 1, the zeros represent all the words that appear in Text 2 but not in Text 1, and vice versa (hence, for a large corpus, the sequence of word counts representing a document will contain a lot of zeros). This modus operandi may strike one as a crude simplification. Language is not only about words, but also about syntax and context. This is true. But, as we shall see, words convey a lot of information, and techniques relying on this simplifying assumption produce useful and interesting results.
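To make this reduction concrete, here is a minimal sketch in Python (standard library only) that turns two short, made-up sentences into word-count sequences over a shared vocabulary. The tokenisation rule (lower-casing and keeping only runs of letters) is my own assumption for illustration, not necessarily what any particular text-mining package does.

```python
import re
from collections import Counter


def word_count_vectors(texts):
    """Reduce each text to a sequence of word counts over the corpus vocabulary."""
    # Lower-case and keep runs of letters only (digits, punctuation, symbols dropped)
    tokenised = [re.findall(r"[^\W\d_]+", text.lower()) for text in texts]
    # The shared vocabulary: every word that appears in at least one text
    vocab = sorted({word for tokens in tokenised for word in tokens})
    counts = [Counter(tokens) for tokens in tokenised]
    # Counter returns 0 for absent words, which produces the zeros
    return vocab, [[c[word] for word in vocab] for c in counts]


vocab, vectors = word_count_vectors([
    "The Court held the law invalid.",   # hypothetical Text 1
    "The Court upheld the law.",         # hypothetical Text 2
])
print(vocab)    # ['court', 'held', 'invalid', 'law', 'the', 'upheld']
print(vectors)  # [[1, 1, 1, 1, 2, 0], [1, 0, 0, 1, 2, 1]]
```

The zeros for “held” and “invalid” in Text 2, and for “upheld” in Text 1, are exactly the zeros described above; word order and syntax are gone, only the counts remain.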

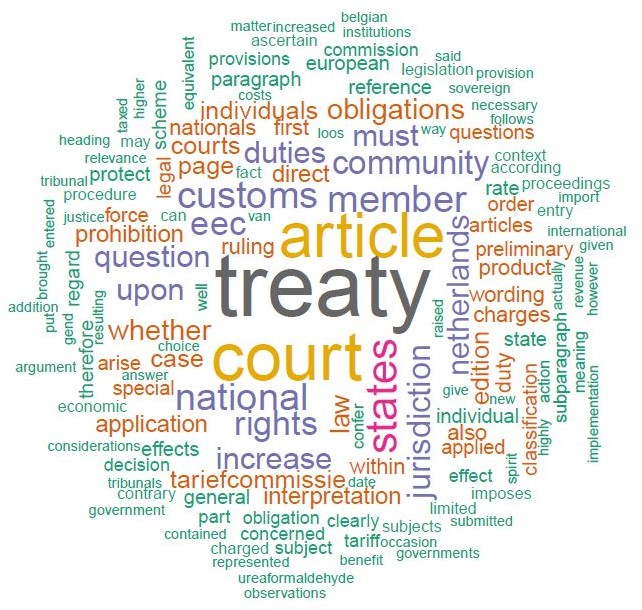

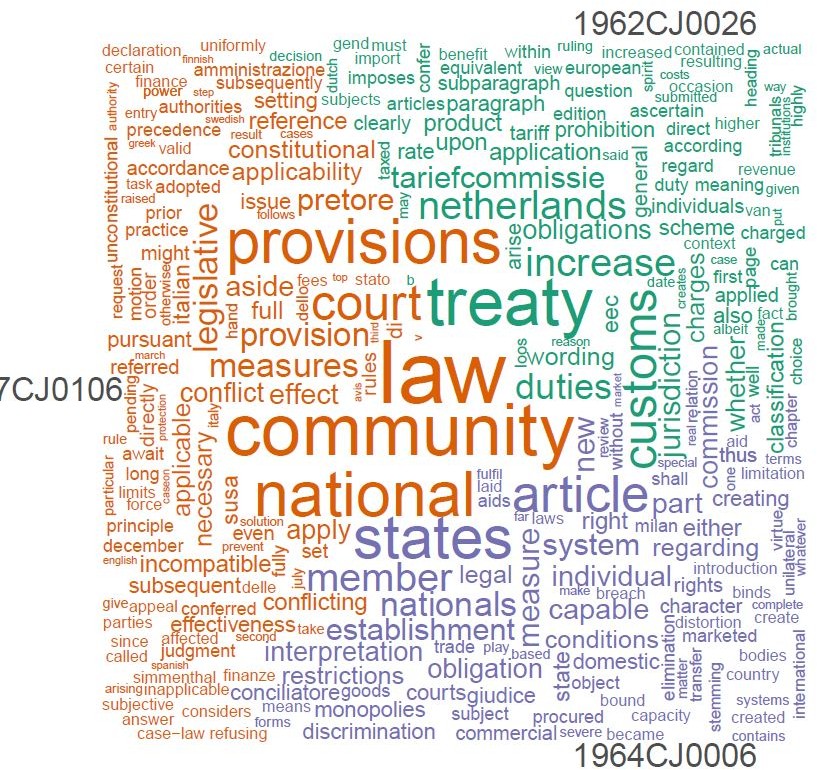

Wordclouds are one familiar way to represent word frequencies in a text or a collection of documents. Figure 1 plots the most frequent words in the CJEU Van Gend en Loos ruling. Word size is proportional to the word’s count in the sequence representing the text. A slightly more sophisticated wordcloud is the comparison cloud in Figure 2. It plots not the most frequent words but the words that are most distinctive of each of the three rulings: Van Gend en Loos, Costa v. ENEL and Simmenthal.
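The difference between “most frequent” and “most distinctive” can be sketched in a few lines of Python. Comparison clouds are computed in different ways by different tools; the score below (a word’s rate in one ruling minus its average rate across all rulings) is one simple choice I assume for illustration, and the document names and tokens are hypothetical.

```python
from collections import Counter


def most_frequent(tokens, n=10):
    """The words a simple wordcloud would draw largest."""
    return Counter(tokens).most_common(n)


def distinctive_words(docs, n=10):
    """For each document, rank words by (rate in this document - mean rate across documents)."""
    rates = {
        name: {w: c / len(tokens) for w, c in Counter(tokens).items()}
        for name, tokens in docs.items()
    }
    vocab = {w for r in rates.values() for w in r}
    mean_rate = {w: sum(r.get(w, 0.0) for r in rates.values()) / len(rates) for w in vocab}
    result = {}
    for name, r in rates.items():
        score = {w: r.get(w, 0.0) - mean_rate[w] for w in vocab}
        # Highest positive deviation first; ties broken alphabetically
        result[name] = sorted(vocab, key=lambda w: (-score[w], w))[:n]
    return result


docs = {  # hypothetical, pre-cleaned token lists
    "ruling_a": ["tax", "tax", "court"],
    "ruling_b": ["residence", "residence", "court"],
}
print(most_frequent(docs["ruling_a"], 1))  # [('tax', 2)]
print(distinctive_words(docs, 1))          # {'ruling_a': ['tax'], 'ruling_b': ['residence']}
```

“court” is frequent in both rulings, so it would feature in a plain wordcloud but scores zero on distinctiveness, which is why comparison clouds are better at telling rulings apart.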

Classifying Legal Documents: Topic Model of the CJEU Case Law

A more sophisticated technique to explore and classify legal texts is topic modelling. Imagine you have a large number of legal texts and you want to discover what issues they address. Topic modelling helps you do this using probabilities. In topic modelling, a topic is modelled as a probability distribution over words and a document as a probability distribution over topics. To generate the topics, the algorithm tries to find the probabilities that are most likely to have generated the observed documents. Sounds complicated? Let’s work our way through an example. Using a simple computer script, Dr Nicolas Lampach from the EUTHORITY Project and I began by scraping the entire universe of preliminary rulings rendered by the CJEU from 1961 to 2016 from the EUR-Lex website. This represents tens of thousands of pages of text. Of course, nobody can sift through all these decisions to find out what themes they address. It’s here that topic modelling comes to the researcher’s rescue.
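The two probability distributions can be unpacked with a small sketch of the generative story assumed by latent Dirichlet allocation (LDA), the most widely used topic model. That the model behind the figures below is LDA is my assumption, and all numbers are invented. Fitting a topic model runs this story in reverse: given only the documents, it looks for the beta (topic-word) and theta (document-topic) values that make them most probable.

```python
import random


def word_probability(theta, beta, w):
    """P(word w | document) = sum over topics k of theta[k] * beta[k][w]."""
    return sum(t_k * beta_k[w] for t_k, beta_k in zip(theta, beta))


def generate_document(theta, beta, vocab, length, rng):
    """LDA's generative story: draw a topic for each word slot, then a word from that topic."""
    words = []
    for _ in range(length):
        k = rng.choices(range(len(theta)), weights=theta)[0]
        w = rng.choices(range(len(vocab)), weights=beta[k])[0]
        words.append(vocab[w])
    return words


# Hypothetical two-topic model over a four-word vocabulary
vocab = ["tax", "dividend", "residence", "worker"]
beta = [[0.5, 0.4, 0.05, 0.05],    # a "taxation" topic
        [0.05, 0.05, 0.5, 0.4]]    # a "free movement of persons" topic
theta = [0.7, 0.3]                 # this document is 70% taxation, 30% persons
print(round(word_probability(theta, beta, 0), 3))  # 0.365
print(generate_document(theta, beta, vocab, 8, random.Random(1)))
```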

After cleaning the scraped texts (texts scraped from websites often contain HTML tags and other things you don’t want to keep) and removing numbers, punctuation and symbols (just as you do to construct a wordcloud), we converted all the texts into sequences of word counts. (Technically, the result of the conversion is called a “document-term matrix”: each row is a document, each column is a word, and each cell indicates the word’s frequency in the corresponding document.) To compute the topic model, we need to specify the number of topics in advance. This choice depends on how detailed we want the resulting picture to be.
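The cleaning and conversion steps can be sketched as follows. This is a minimal, standard-library illustration under my own assumptions: a regular expression is a crude way to strip tags (a proper HTML parser is more robust), and the two snippets are invented rather than taken from EUR-Lex.

```python
import html
import re
from collections import Counter


def clean(raw_html):
    """Strip HTML tags and entities, lower-case, keep alphabetic tokens only."""
    text = re.sub(r"<[^>]+>", " ", raw_html)          # drop tags such as <p> or <br/>
    text = html.unescape(text)                        # decode entities such as &amp;
    return re.findall(r"[^\W\d_]+", text.lower())     # drops numbers, punctuation, symbols


def document_term_matrix(raw_docs):
    """Rows are documents, columns are words, cells are word frequencies."""
    counts = [Counter(clean(doc)) for doc in raw_docs]
    vocab = sorted(set().union(*counts))
    return vocab, [[c[w] for w in vocab] for c in counts]


vocab, dtm = document_term_matrix([
    "<p>Article 63 TFEU: free movement of capital.</p>",   # hypothetical snippets
    "<p>Capital &amp; dividends</p>",
])
print(vocab)  # ['article', 'capital', 'dividends', 'free', 'movement', 'of', 'tfeu']
print(dtm)    # [[1, 1, 0, 1, 1, 1, 1], [0, 1, 1, 0, 0, 0, 0]]
```

A real pipeline would usually also drop very common function words such as “of”, which carry little information about topics.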

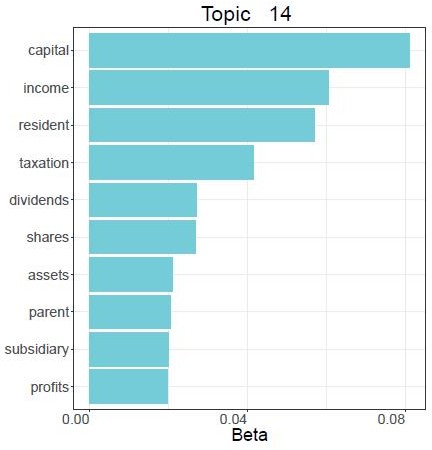

Figure 3 illustrates one of the topics generated by a model for which we set the number of topics at 25. The topic is represented by its 10 most distinctive words (the higher the beta value, the more characteristic of the topic the word is). Looking at these “keywords”, which, it is essential to understand, are not chosen by the researcher but emerge from the analysis, we may summarise this topic as corporate taxation.
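For readers curious about what happens inside the algorithm, here is a compact sketch of one common way of fitting LDA, collapsed Gibbs sampling, in plain Python. It is a teaching sketch under my own assumptions (priors `alpha` and `eta`, number of iterations, toy documents), not the software used for Figure 3, which in practice would be an established package. The `beta` matrix it returns is what the beta values in Figure 3 refer to; `top_words` lists a topic’s most characteristic words, and the hypothetical helper `most_representative` performs the kind of check described next, finding the documents with the highest proportion of a topic.

```python
import random


def lda_gibbs(docs, n_topics, alpha=0.1, eta=0.01, n_iter=200, seed=0):
    """Fit LDA by collapsed Gibbs sampling. docs is a list of token lists."""
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    index = {w: i for i, w in enumerate(vocab)}
    K, V = n_topics, len(vocab)
    n_dk = [[0] * K for _ in docs]       # tokens in document d assigned to topic k
    n_kw = [[0] * V for _ in range(K)]   # times word w is assigned to topic k
    n_k = [0] * K                        # tokens assigned to topic k overall
    z = []                               # current topic of every token

    def update(d, k, v, delta):
        n_dk[d][k] += delta
        n_kw[k][v] += delta
        n_k[k] += delta

    # Random initial assignment of tokens to topics
    for d, doc in enumerate(docs):
        z.append([rng.randrange(K) for _ in doc])
        for w, k in zip(doc, z[d]):
            update(d, k, index[w], +1)

    for _ in range(n_iter):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                v = index[w]
                update(d, z[d][i], v, -1)  # remove the token's current assignment
                # Full conditional: p(k) ∝ (n_dk + alpha) * (n_kw + eta) / (n_k + V * eta)
                weights = [(n_dk[d][k] + alpha) * (n_kw[k][v] + eta) / (n_k[k] + V * eta)
                           for k in range(K)]
                z[d][i] = rng.choices(range(K), weights=weights)[0]
                update(d, z[d][i], v, +1)

    # beta[k][w]: topic-word probabilities; theta[d][k]: document-topic proportions
    beta = [[(n_kw[k][v] + eta) / (n_k[k] + V * eta) for v in range(V)] for k in range(K)]
    theta = [[(n_dk[d][k] + alpha) / (len(doc) + K * alpha) for k in range(K)]
             for d, doc in enumerate(docs)]
    return vocab, beta, theta


def top_words(vocab, beta, k, n=10):
    """The n words with the highest beta value in topic k."""
    ranked = sorted(range(len(vocab)), key=lambda v: beta[k][v], reverse=True)
    return [vocab[v] for v in ranked[:n]]


def most_representative(doc_ids, theta, k, n=1):
    """Documents ranked by their estimated proportion of topic k (highest first)."""
    ranked = sorted(range(len(doc_ids)), key=lambda d: theta[d][k], reverse=True)
    return [(doc_ids[d], theta[d][k]) for d in ranked[:n]]


docs = [  # hypothetical, pre-cleaned token lists
    ["tax", "dividend", "tax", "credit"],
    ["residence", "worker", "family"],
    ["dividend", "credit", "tax"],
    ["worker", "residence", "benefit"],
]
vocab, beta, theta = lda_gibbs(docs, n_topics=2)
for k in range(2):
    print(k, top_words(vocab, beta, k, n=3))
print(most_representative(["doc1", "doc2", "doc3", "doc4"], theta, k=0))
```

The sampler is stochastic, so the numbering of topics is arbitrary and labelling them (“corporate taxation”, and so on) remains the researcher’s job.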

To check that this interpretation of the topic is correct, we can inspect the decision which, according to the model, has the highest proportion of this topic. Here, it turns out to be Test Claimants in the FII Group Litigation v Commissioners of Inland Revenue, a 2012 Grand Chamber ruling. Here is a quote from that ruling:

The High Court of Justice of England and Wales, Chancery Division, seeks, first, to obtain clarification regarding paragraph 56 of the judgment in Test Claimants in the FII Group Litigation and point 1 of its operative part. It recalls that the Court of Justice held, in paragraphs 48 to 53, 57 and 60 of that judgment, that national legislation which applies the exemption method to nationally-sourced dividends and the imputation method to foreign-sourced dividends is not contrary to Articles 49 TFEU and 63 TFEU, provided that the tax rate applied to foreign-sourced dividends is not higher than the rate applied to nationally-sourced dividends and that the tax credit is at least equal to the amount paid in the Member State of the company making the distribution, up to the limit of the tax charged in the Member State of the company receiving the dividends.

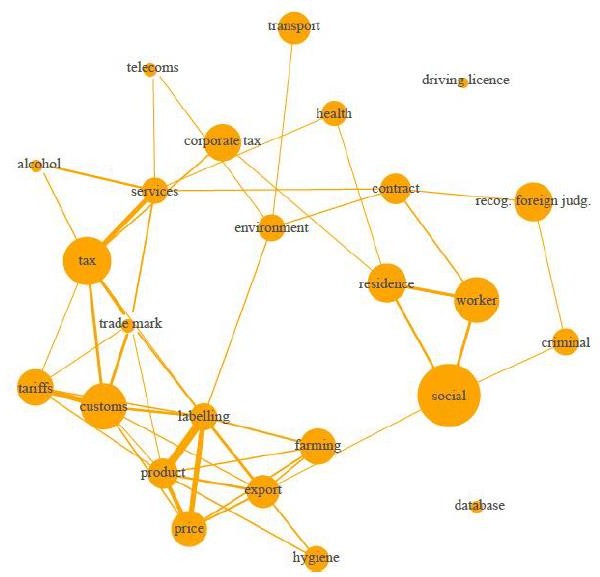

Really looks like corporate taxation, doesn’t it? After labelling the 25 topics, we can represent the case law as a network in which node size represents overall topic proportion, while edge thickness corresponds to the weighted number of words shared by two topics.
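The post does not spell out exactly how the edge weights were computed, so the following sketch uses one plausible definition that I assume purely for illustration: node size is a topic’s mean proportion across all documents, and the edge between two topics sums, over the words found in both topics’ top-n lists, the smaller of the two beta values. The matrices and labels are hypothetical.

```python
from itertools import combinations


def topic_network(beta, theta, labels, n_top=10):
    """Hypothetical topic network: node size = mean topic proportion across documents;
    edge weight = sum of min(beta) over words in both topics' top-n lists."""
    K = len(beta)
    sizes = {labels[k]: sum(row[k] for row in theta) / len(theta) for k in range(K)}
    top = [set(sorted(range(len(beta[k])), key=lambda w: beta[k][w], reverse=True)[:n_top])
           for k in range(K)]
    edges = {}
    for a, b in combinations(range(K), 2):
        shared = top[a] & top[b]
        if shared:
            edges[(labels[a], labels[b])] = sum(min(beta[a][w], beta[b][w]) for w in shared)
    return sizes, edges


beta = [[0.5, 0.3, 0.2, 0.0],    # hypothetical topic-word probabilities
        [0.0, 0.4, 0.1, 0.5]]
theta = [[0.8, 0.2], [0.4, 0.6]]  # hypothetical document-topic proportions
sizes, edges = topic_network(beta, theta, ["taxation", "social rights"], n_top=2)
print(sizes)
print(edges)
```

The resulting node sizes and edge weights can then be handed to any network-plotting tool.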

Figure 4 suggests that internal market and tax issues represent a big chunk of what the CJEU does. However, social rights, residence rights and the recognition of foreign judgments (private international law) also make up a substantial share of the cases on which the Luxembourg judges sit.

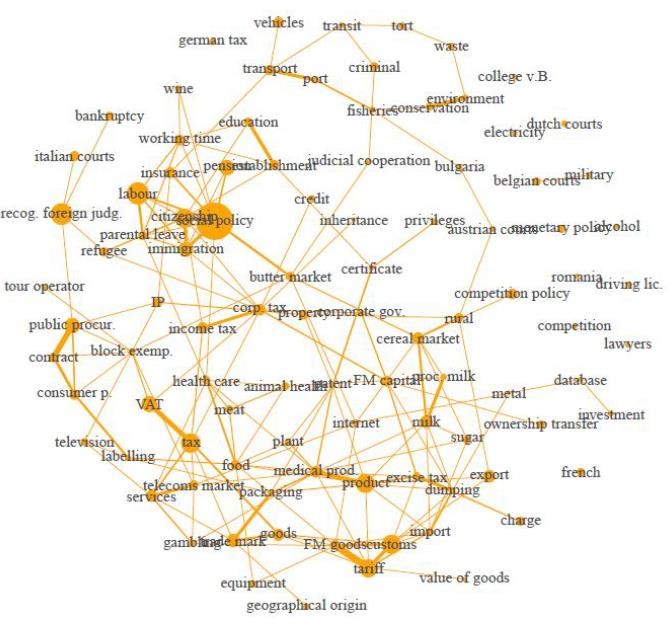

You think 25 categories is too few to get a good sense of issue prevalence in the Court’s case law? How about a topic model with 100 topics? As Figure 5 shows, such a model provides a more detailed picture, although we find the same themes (internal market in the lower-right region, social and immigration issues in the left region).

It is also possible to construct dynamic topic models to study the evolution of case law over time, or “litigant” topic models to study how issues vary across types of litigants. The technique can also be applied to the analysis of law review articles, blog posts or textbooks. Imagination is the limit!
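A full dynamic topic model lets the topics themselves change over time, which is beyond a short sketch. A much simpler descriptive alternative, and explicitly not a dynamic topic model, is to average the document-topic proportions of a single fitted model by year of decision. The years and proportions below are hypothetical.

```python
from collections import defaultdict


def topic_share_by_year(years, theta):
    """Average document-topic proportions per year (a descriptive summary,
    not a dynamic topic model)."""
    n_topics = len(theta[0])
    totals = defaultdict(lambda: [0.0] * n_topics)
    n_docs = defaultdict(int)
    for year, proportions in zip(years, theta):
        for k, p in enumerate(proportions):
            totals[year][k] += p
        n_docs[year] += 1
    return {year: [t / n_docs[year] for t in totals[year]] for year in sorted(totals)}


years = [1990, 1990, 2000]                      # hypothetical decision years
theta = [[0.8, 0.2], [0.6, 0.4], [0.1, 0.9]]   # hypothetical topic proportions
print(topic_share_by_year(years, theta))
```

Because the topics are held fixed, this only shows how much attention each topic receives over time, not how the vocabulary of a topic evolves.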

Opinion Mining: Correspondence Analysis of Bundesverfassungsgericht Rulings on European Integration

Topic modelling is most useful when the issues are unknown: we don’t know what the documents are talking about, and that is what we want to discover. Sometimes, however, the issue is known and what we want to know is the stance the documents take on it. For example, we know that the German Federal Constitutional Court, the Bundesverfassungsgericht, has issued a number of rulings on European integration. These rulings are easy to identify and are likely to come up in any educated conversation about the evolution of the Court’s position on European integration: Solange, Maastricht, Lisbon, Honeywell and so on are household names for students of EU law and German constitutional law alike. It is also widely recognised that the position of the German Court has changed over time, oscillating between an integration-friendly and a more Eurosceptic stance. Can we use text-mining methods to chart the evolution of the German Court’s position without having to read all these rulings? Correspondence analysis, a technique employed in a wide variety of domains (not just text-mining), can be used for that purpose. Applied to a collection of texts, correspondence analysis arrays the texts on dimensions that reflect variations in word usage. Thus, texts with similar patterns of word use will be assigned positions close to each other on the dimensions computed by the algorithm.
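Under the hood, correspondence analysis is a singular value decomposition of the standardised residuals of the document-term table (observed proportions minus what independence between documents and words would predict). The pure-Python sketch below computes the principal inertias and the documents’ coordinates on the first dimensions, using power iteration in place of a library SVD routine; that substitution is my choice to keep the example dependency-free, and in practice one would use an established implementation. It assumes no document and no term has a total count of zero, and the toy matrix is hypothetical.

```python
import math
import random


def correspondence_analysis(counts, n_dims=2, n_iter=500, seed=0):
    """Principal inertias and document (row) coordinates of a document-term matrix.

    Assumes every document and every term has a non-zero total count.
    """
    n = sum(map(sum, counts))
    r = [sum(row) / n for row in counts]            # document masses
    c = [sum(col) / n for col in zip(*counts)]      # term masses
    # Standardised residuals: (p_ij - r_i * c_j) / sqrt(r_i * c_j)
    S = [[(counts[i][j] / n - r[i] * c[j]) / math.sqrt(r[i] * c[j])
          for j in range(len(c))] for i in range(len(r))]
    # Eigenvalues of M = S S^T are the principal inertias (squared singular values)
    M = [[sum(a * b for a, b in zip(si, sk)) for sk in S] for si in S]
    rng = random.Random(seed)
    inertias, coords = [], [[] for _ in r]
    for _ in range(n_dims):
        v = [rng.gauss(0, 1) for _ in r]
        norm = math.sqrt(sum(x * x for x in v))
        v = [x / norm for x in v]
        for _ in range(n_iter):             # power iteration for the leading eigenvector
            w = [sum(m * x for m, x in zip(row, v)) for row in M]
            norm = math.sqrt(sum(x * x for x in w))
            if norm < 1e-12:
                break
            v = [x / norm for x in w]
        lam = sum(vi * sum(m * vj for m, vj in zip(row, v)) for vi, row in zip(v, M))
        inertias.append(lam)
        for i, vi in enumerate(v):          # principal coordinate = u_i * sigma / sqrt(r_i)
            coords[i].append(vi * math.sqrt(max(lam, 0.0)) / math.sqrt(r[i]))
        # Deflate so that the next pass finds the next dimension
        M = [[M[i][j] - lam * v[i] * v[j] for j in range(len(v))] for i in range(len(v))]
    return inertias, coords


# Hypothetical counts: documents 0-1 favour the first two words, documents 2-3 the last two
counts = [[5, 4, 0, 1], [4, 5, 1, 0], [0, 1, 5, 4], [1, 0, 4, 5]]
inertias, coords = correspondence_analysis(counts)
print(inertias)
print([round(doc[0], 3) for doc in coords])
```

On the first dimension, documents 0 and 1 land on one side and documents 2 and 3 on the other; the sign of a dimension is arbitrary, so only relative positions matter.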

For this exercise, I identified and downloaded 37 Bundesverfassungsgericht opinions associated with European integration. I then removed the parties’ arguments (for the majority opinions, this means I only kept the Begründung). As with the topic models, I cleaned the texts, removed punctuation, numbers and symbols, and set all words to lower case. I also applied stemming so that “demokratisch”, “demokratische” and “Demokratie” would be treated as the same concept. Finally, I converted the corpus to a term-document matrix.
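To show what stemming does, here is a deliberately crude suffix-stripping sketch. The suffix list and the minimum stem length are hypothetical choices of mine that happen to handle the three example words; a real analysis would rely on an established stemmer such as the Snowball stemmer for German, whose rules are far more careful.

```python
import re

# Hypothetical, toy suffix list, longest first so that "ische" wins over "e"
SUFFIXES = sorted(["ischen", "ische", "isch", "ie", "en", "er", "es", "e"], key=len, reverse=True)


def crude_german_stem(word, min_stem=4):
    """Strip the longest matching suffix, keeping at least min_stem letters."""
    word = word.lower()
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= min_stem:
            return word[: -len(suffix)]
    return word


def preprocess(text):
    """Lower-case, drop punctuation, numbers and symbols, then stem each token."""
    tokens = re.findall(r"[^\W\d_]+", text.lower())
    return [crude_german_stem(t) for t in tokens]


print(preprocess("Demokratie, demokratisch und demokratische Wahl 2009!"))
# ['demokrat', 'demokrat', 'und', 'demokrat', 'wahl']
```

After stemming, the three forms collapse into a single column of the matrix, so their counts add up instead of being split across three columns.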

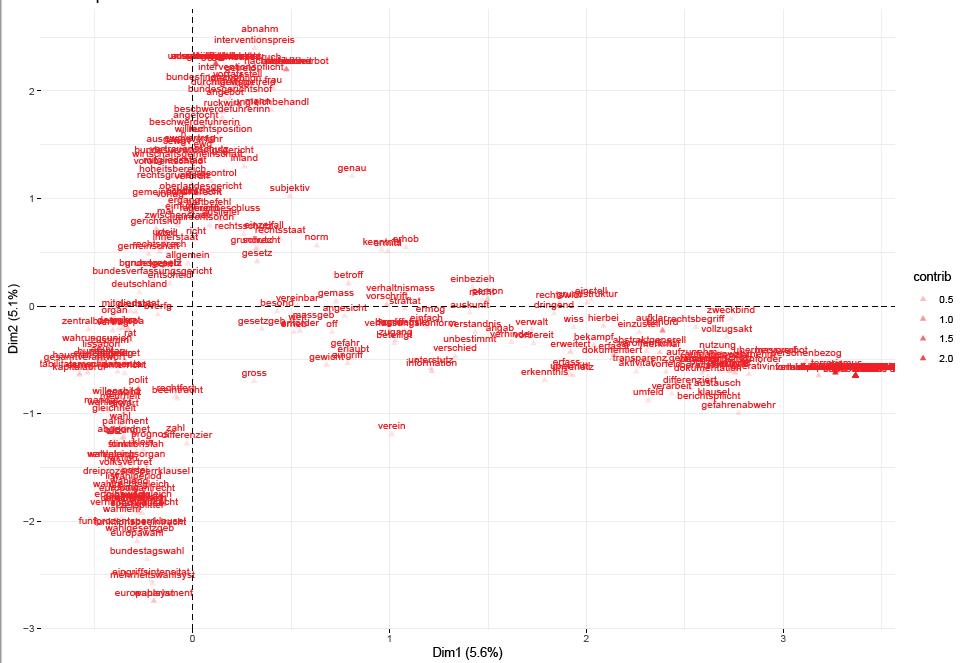

Correspondence analysis needs 36 dimensions (one fewer than the number of documents) to account for all the variance in word usage (long documents can indeed diverge on multiple dimensions). Figure 6 plots the terms that make the strongest contributions to the first two dimensions, that is, the terms that account for the largest percentage of variance on those dimensions. Doing so helps interpret the dimensions before looking at the positions assigned to the texts.
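“Contribution” has a precise meaning in correspondence analysis: a term’s contribution to a dimension is its share of that dimension’s inertia (variance), so the contributions of all terms to a dimension sum to one. The self-contained sketch below recomputes the standardised residuals, estimates the first dimension by power iteration (my substitution for a library SVD), and also returns the maximum number of dimensions, min(documents, terms) minus one, which is why 37 opinions yield at most 36 dimensions. The counts and German terms are hypothetical.

```python
import math
import random


def term_contributions(counts, terms, n_iter=500, seed=0):
    """Share of each term in the inertia of the first CA dimension (shares sum to 1)."""
    n = sum(map(sum, counts))
    r = [sum(row) / n for row in counts]            # document masses
    c = [sum(col) / n for col in zip(*counts)]      # term masses
    S = [[(counts[i][j] / n - r[i] * c[j]) / math.sqrt(r[i] * c[j])
          for j in range(len(c))] for i in range(len(r))]
    # Power iteration on S^T S converges to the first right singular vector v
    rng = random.Random(seed)
    v = [rng.gauss(0, 1) for _ in c]
    for _ in range(n_iter):
        sv = [sum(s * x for s, x in zip(row, v)) for row in S]                     # S v
        w = [sum(S[i][j] * sv[i] for i in range(len(S))) for j in range(len(c))]    # S^T S v
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0:
            raise ValueError("no variation in word usage")
        v = [x / norm for x in w]
    # Contribution of term j = c_j * g_j^2 / lambda, which simplifies to v_j^2
    ranked = sorted(zip(terms, (x * x for x in v)), key=lambda tc: tc[1], reverse=True)
    max_dims = min(len(r), len(c)) - 1   # e.g. 37 documents -> at most 36 dimensions
    return max_dims, ranked


terms = ["straftat", "terrorismus", "parlament", "wahl", "gericht"]
counts = [[5, 4, 0, 1, 3],   # hypothetical documents; "gericht" is used evenly
          [4, 5, 1, 0, 3],
          [0, 1, 5, 4, 3],
          [1, 0, 4, 5, 3]]
max_dims, ranked = term_contributions(counts, terms)
print(max_dims)
print(ranked)
```

A word used evenly across all documents, like “gericht” here, contributes nothing to any dimension, which is why the most informative terms for interpretation are the ones whose usage differs sharply between opinions.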

Words such as “Straftat”, “Gefahrenabwehr” and “Terrorismus” suggest that the first dimension is more about criminal law, which is confirmed by the fact that the 2013 Anti-terror decision is assigned the highest score on that dimension. Now, if we look at the second dimension, we see words relating to the internal market (“Abnahme”, “Interventionspreis”) in the uppermost region of the plot and words relating to democracy and elections (“Bundestagswahl”, “politisch”, “Parlament”, etc.) in the lower regions. For those who know the case law of the German Court, democracy has often been used as an argument to limit integration (think of the Maastricht ruling, for example). So this is likely to be our dimension of interest. Let’s plot the decisions’ estimated positions over time on this dimension.

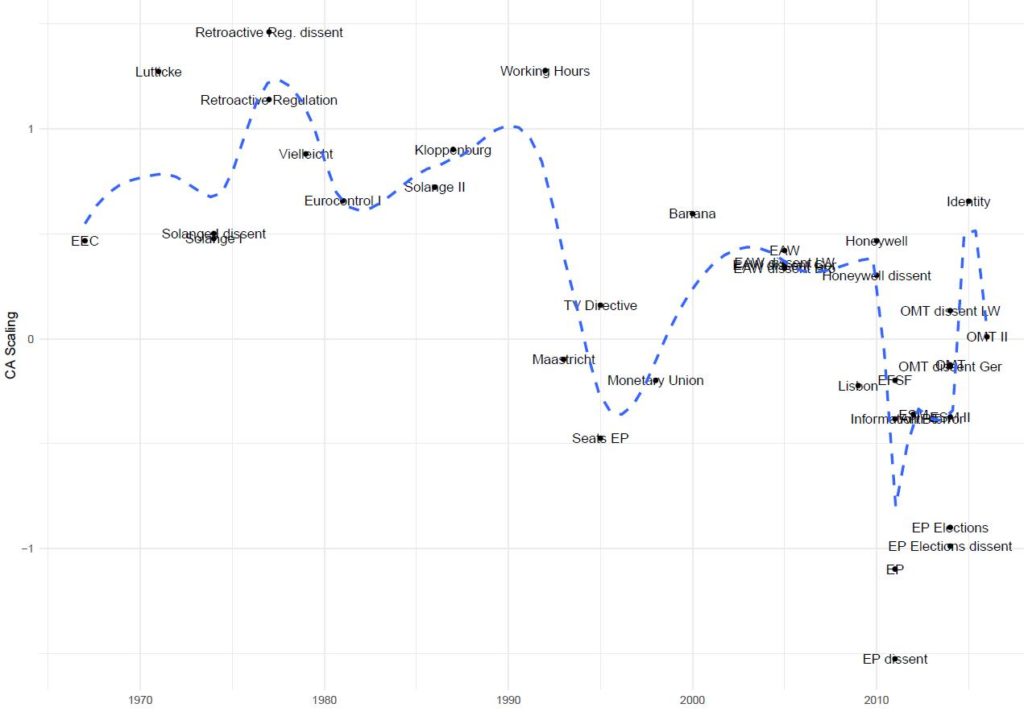

Figure 7 illustrates the results. I have added a loess curve to the plot to make the evolution of the case law easier to follow. If we interpret the dimension as integration-friendliness, most EU law scholars I know would expect Solange II to come out as more integration-friendly than Solange I, just as we would expect Banana and Honeywell to be assigned less Eurosceptic positions than Lisbon and Maastricht. The estimated positions are consistent with these expectations. Note also that the dissent of Judge Landau in Honeywell is assigned a more Eurosceptic position than the majority opinion whereas the reverse applies for the dissent of Judge Lübbe-Wolff in the OMT case, as one would expect.
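Any statistics package offers a LOESS smoother, but the idea behind the curve is simple: for each point, fit a straight line by weighted least squares to its nearest neighbours, giving closer neighbours more weight through the tricube function. The sketch below is a minimal degree-1 version in plain Python; the span of 0.75 is an assumption (it is R’s default for `loess`), R’s `loess` defaults to degree 2, and the sketch does not reproduce every detail of R’s implementation. The years and positions are hypothetical.

```python
def loess(xs, ys, span=0.75):
    """Degree-1 LOESS: tricube-weighted local linear fit evaluated at each x."""
    n = len(xs)
    q = max(2, int(round(span * n)))      # number of points in each neighbourhood
    fitted = []
    for x0 in xs:
        dists = sorted(abs(x - x0) for x in xs)
        h = dists[q - 1] or 1e-12          # distance to the q-th nearest point
        w = [(1 - min(abs(x - x0) / h, 1.0) ** 3) ** 3 for x in xs]   # tricube weights
        sw = sum(w)
        mx = sum(wi * x for wi, x in zip(w, xs)) / sw
        my = sum(wi * y for wi, y in zip(w, ys)) / sw
        sxx = sum(wi * (x - mx) ** 2 for wi, x in zip(w, xs))
        sxy = sum(wi * (x - mx) * (y - my) for wi, x, y in zip(w, xs, ys))
        slope = sxy / sxx if sxx > 0 else 0.0
        fitted.append(my + slope * (x0 - mx))   # local line evaluated at x0
    return fitted


years = [1974, 1986, 1993, 2000, 2005, 2009, 2010, 2014]                 # hypothetical
positions = [-0.8, 0.3, -0.9, 0.1, -0.2, -0.7, 0.2, -0.1]               # hypothetical
print([round(y, 2) for y in loess(years, positions)])
```

A smaller span follows individual decisions more closely; a larger one shows only the broad trend.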

Of course, owing to the simplifying assumptions of these text-mining techniques, one may quibble about the placement of this or that particular opinion (shouldn’t the dissents in the 2005 EAW ruling be assigned a more integration-friendly position than the majority opinion?). However, even for researchers who think that you can’t be sure unless you read all the judgments (good luck with that!), correspondence analysis provides a useful first approximation.

Going Forward: Augmented Doctrinal Analysis

This is only a small sample of the many things you can do with text-mining in law. I hope I have managed to convince some of those who are sceptical of empirical methods that text-mining can also contribute to the study of legal doctrines and legal discourse. If not, I ask them to wait for my next post.